How can you judge a monitor's color reproduction from its specifications?


How color reproduction depends on the matrix type


Any discussion of a monitor's ability to display colors accurately should start with matrix types.

Most TN matrices fall short when it comes to displaying colors. Their forte is fast response and low cost.

VA screens rank a notch higher, but their color accuracy is not perfect either. Recently, however, more and more VA monitors for designers have been appearing on the market, with good viewing angles, natural color reproduction and price tags slightly lower than IPS.

IPS matrices are the best in this regard: they offer not only accurate color reproduction, but also a wide dynamic range, coupled with good brightness and contrast. These are all important parameters that affect how color is perceived, which is why designers prefer to work on IPS monitors.

PLS is an "advanced" variant of IPS developed by Samsung. There is no convincing evidence that PLS outperforms IPS, and, unfortunately, we have not come across two otherwise identical monitors with these matrices for a head-to-head comparison.

Color depth and bit depth of the monitor


Most ordinary monitors that we have at home or at work use a classic 8-bit matrix.

First, let's take a look at bits. A bit is a single digit of binary code that can take one of two values: 1 or 0, yes or no. If a pixel were described by one bit, it would be either completely black or completely white. That is not enough to describe a complex color, so we combine several bits. Every added bit doubles the number of possible combinations. One bit has 2 possible values, zero and one. Two bits already give four possible values: 00, 01, 10 or 11. With three bits, the number of options grows to eight, and so on. The total number of options equals two raised to the power of the number of bits.
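The doubling described above is easy to check by listing the patterns directly. This is only an illustrative sketch; the helper name `bit_patterns` is made up for this example.

```python
from itertools import product


def bit_patterns(n_bits: int) -> list[str]:
    """Return every distinct pattern that n_bits binary digits can form."""
    return ["".join(p) for p in product("01", repeat=n_bits)]


for n in range(1, 4):
    patterns = bit_patterns(n)
    # The number of patterns always equals 2 ** n.
    print(n, len(patterns), patterns)
```

Each extra bit doubles the length of the list: 2, 4, then 8 patterns.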

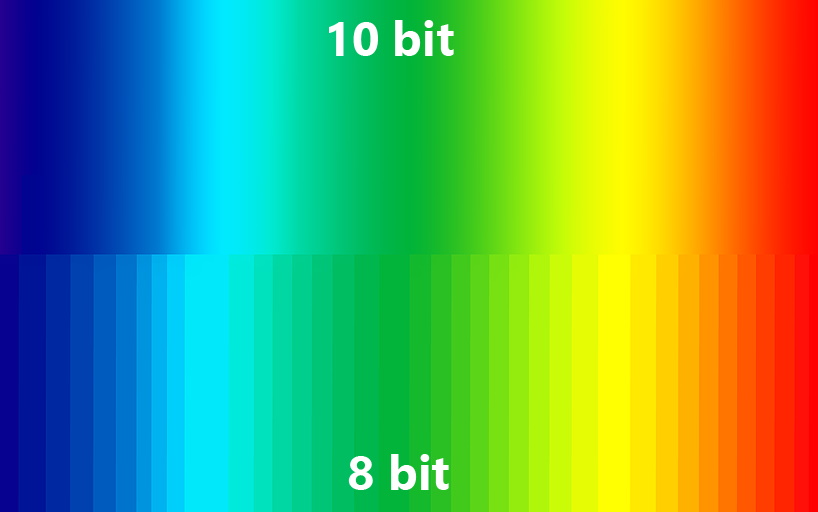

In practice, "bit depth" determines how many gradations of a shade a monitor can display. Roughly speaking, a hypothetical monitor with two-bit color could display only 4 shades: black, dark gray, light gray and white. It could show the colorful paintings of the Impressionists only in "shades of dirt in a puddle" mode. A classic 8-bit matrix has 256 levels per color channel, which gives about 16.7 million colors across red, green and blue, while a professional 10-bit matrix has 1,024 levels per channel and more than a billion colors, so gradients look noticeably smoother.
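The figures of 16.7 million and over a billion follow from simple arithmetic, assuming the bit depth is quoted per channel and each pixel has three channels (red, green, blue). The function names below are illustrative only.

```python
def shades_per_channel(bits: int) -> int:
    """Number of brightness levels one color channel can take."""
    return 2 ** bits


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors when every channel varies independently."""
    return shades_per_channel(bits_per_channel) ** channels


for bits in (6, 8, 10):
    print(f"{bits}-bit: {shades_per_channel(bits)} levels per channel, "
          f"{total_colors(bits):,} colors")
```

For 8 bits this gives 16,777,216 colors and for 10 bits 1,073,741,824, which matches the rounded numbers quoted in monitor specifications.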

This is how a black-to-white gradient looks at different bit depths.
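The same effect can be reproduced numerically. The sketch below takes a smooth ramp from black (0.0) to white (1.0) and rounds it to the levels a given bit depth allows. It is a simplified grayscale model, not a description of how any particular monitor processes the signal.

```python
def quantize_gradient(n_samples: int, bits: int) -> list[float]:
    """Round a smooth 0.0-1.0 ramp to the levels available at `bits` depth."""
    max_level = 2 ** bits - 1
    ramp = [i / (n_samples - 1) for i in range(n_samples)]
    return [round(value * max_level) / max_level for value in ramp]


for bits in (1, 2, 4, 8):
    distinct = len(set(quantize_gradient(1024, bits)))
    print(f"{bits}-bit gradient: {distinct} distinct gray levels")
```

The fewer the distinct levels, the wider each band of identical gray, which is what shows up as visible banding in a gradient.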

What are FRC and pseudo 8-bit and 10-bit matrices?


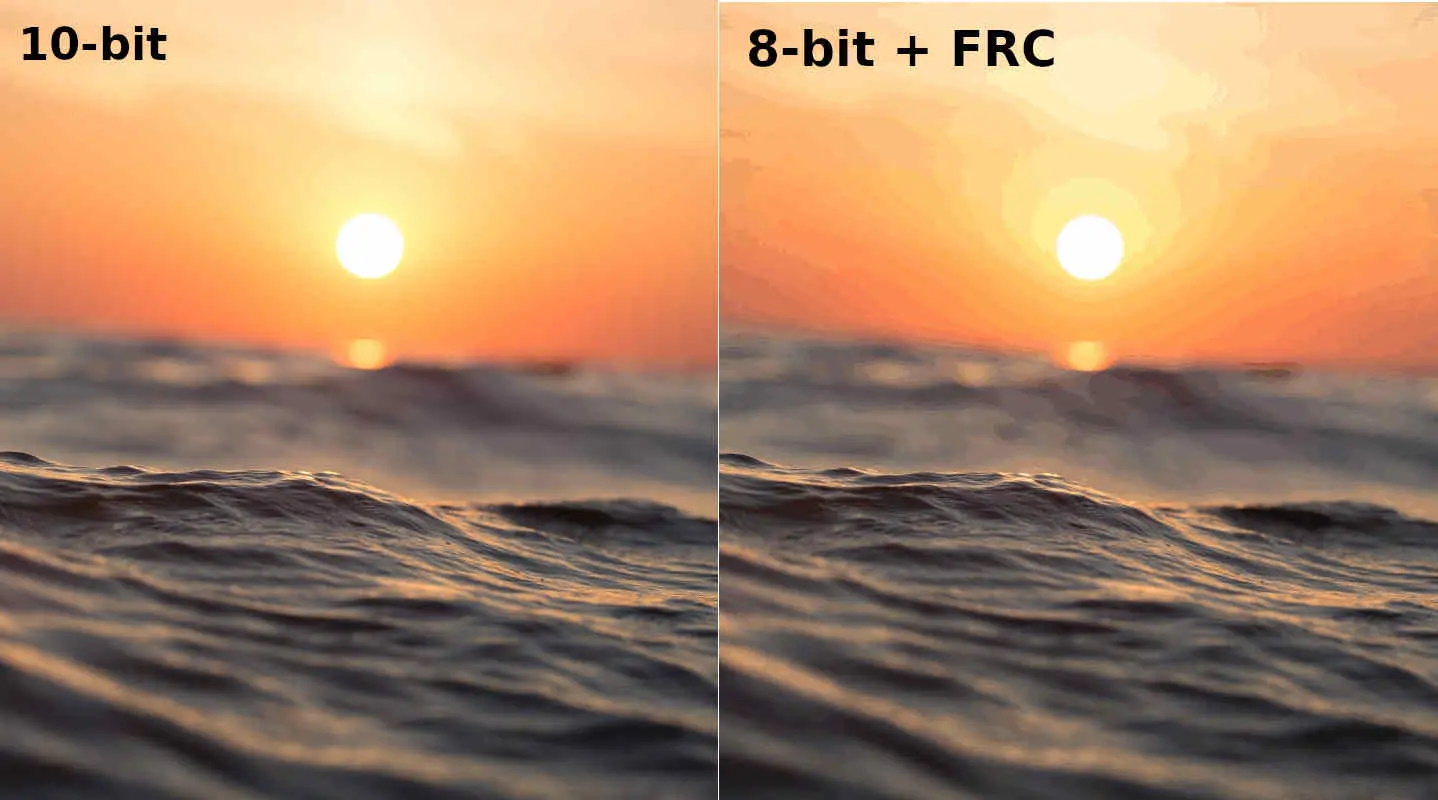

Great, we have more or less sorted out bit depth, but what is FRC? Monitor spec sheets often list something like 6 bits + FRC or 8 bits + FRC. FRC (Frame Rate Control) is a trick that gives an LCD greater apparent color depth without increasing the native bit depth of the matrix. It rapidly alternates the brightness of a subpixel between neighboring levels from frame to frame, so the eye averages them into an intermediate shade that the matrix cannot show directly. In this way the monitor fills in the missing colors using its available palette, and a conventional 8-bit matrix can display about a billion colors, as a 10-bit one does, instead of its usual 16.7 million.
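A toy model helps show how frame-by-frame alternation produces in-between shades. The sketch below assumes a 6-bit matrix imitating 8-bit levels over a cycle of four frames. Real panels use more elaborate spatial and temporal patterns, so the function names and the simple schedule here are assumptions made purely for illustration.

```python
NATIVE_BITS = 6                                  # what the matrix can show
TARGET_BITS = 8                                  # what the signal asks for
CYCLE = 2 ** (TARGET_BITS - NATIVE_BITS)         # 4 frames per FRC cycle
NATIVE_MAX = 2 ** NATIVE_BITS - 1                # 63


def frc_frames(target_level: int) -> list[int]:
    """Return the native 6-bit level shown in each frame of one cycle."""
    low, remainder = divmod(target_level, CYCLE)
    high = min(low + 1, NATIVE_MAX)  # the top few levels cannot go higher
    # Show the brighter neighbor in `remainder` frames, the darker in the rest.
    return [high if frame < remainder else low for frame in range(CYCLE)]


def perceived_level(frames: list[int]) -> float:
    """Time-averaged brightness, expressed on the 8-bit scale."""
    return sum(frames) * CYCLE / len(frames)


for level in (128, 129, 130, 131):
    frames = frc_frames(level)
    print(level, frames, perceived_level(frames))
```

For example, the 8-bit level 130 is shown as the 6-bit levels 33, 33, 32, 32, which average out to 130 on the 8-bit scale. In this simplified model the very brightest levels (253 to 255) are clipped, because there is no 6-bit level above 63 to alternate with.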

If we turn this into the practical question of what to buy, we advise against trying to save money with 6-bit + FRC matrices, since they cost roughly the same as regular 8-bit monitors. Unless you are an aesthete with eagle-eyed vision, an 8-bit matrix is enough for everyday work, games and multimedia. It makes sense to pay extra for a 10-bit display if:

- you are a designer or artist

- you are a gamer with high hardware requirements

- you have money to spare

How many bits does a monitor need?


As practice shows, not everyone can see the difference between an 8-bit and a 10-bit matrix, especially when something dynamic is happening on screen, such as a dozen sports cars in team colors racing along a sun-drenched track. Before the HDR format appeared, 8-bit images were considered the standard, and this format was used in Blu-ray players, gaming monitors, and regular office or home monitors.

However, modern panels, especially the now popular OLED TVs, can display many more shades, gradients and colors than 8-bit sources allow. At the time of writing, HDR in its original sense is designed specifically around 10 bits. Nevertheless, the "HDR Ready" label is now applied all over the place, and plenty of HDR monitors on sale have 8-bit matrices and essentially just tweak the gamma and contrast.

The choice is made simpler by the fact that a monitor's bit depth is strongly tied to its price. Simply put, 10-bit HDR matrices sit predominantly in the premium segment and start at about $500 per monitor. As a rule, these are 27-inch and 32-inch models aimed at demanding gamers, designers and photographers. The 8-bit + FRC + HDR class is dominated by 27-inch monitors with slightly more affordable price tags. If you settle for the "8-bit + HDR" option, you can find a 24-inch model for $200-300.

It is difficult to give specific advice here, since everyone has different budgets and requirements. We will only note that for the last few years most people, including us, have used ordinary 8-bit monitors for games, multimedia and work, and no one has died. Fanaticism is pointless here: buying a sophisticated monitor is justified only if you clearly understand why you need it. Otherwise you may discover that your HDMI cable or video card drivers do not even support 10-bit color.
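The point about cables comes down to rough arithmetic: more bits per pixel means more data per second over the same link. The sketch below estimates the raw pixel data rate only. It ignores blanking intervals, link encoding overhead and chroma subsampling, so treat the results as a lower bound to compare against whatever your cable and ports are rated for. The function name is made up for this example.

```python
def raw_video_rate_gbps(width: int, height: int, refresh_hz: int,
                        bits_per_channel: int, channels: int = 3) -> float:
    """Uncompressed pixel data rate in gigabits per second (no overhead)."""
    bits_per_second = width * height * refresh_hz * bits_per_channel * channels
    return bits_per_second / 1e9


for bits in (8, 10):
    rate = raw_video_rate_gbps(3840, 2160, 60, bits)
    print(f"4K at 60 Hz, {bits}-bit: about {rate:.2f} Gbit/s of pixel data")
```

Going from 8 to 10 bits per channel raises the required data rate by 25%, which can be enough to exceed what an older cable or port can carry at a given resolution and refresh rate.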

Color gamut / color models


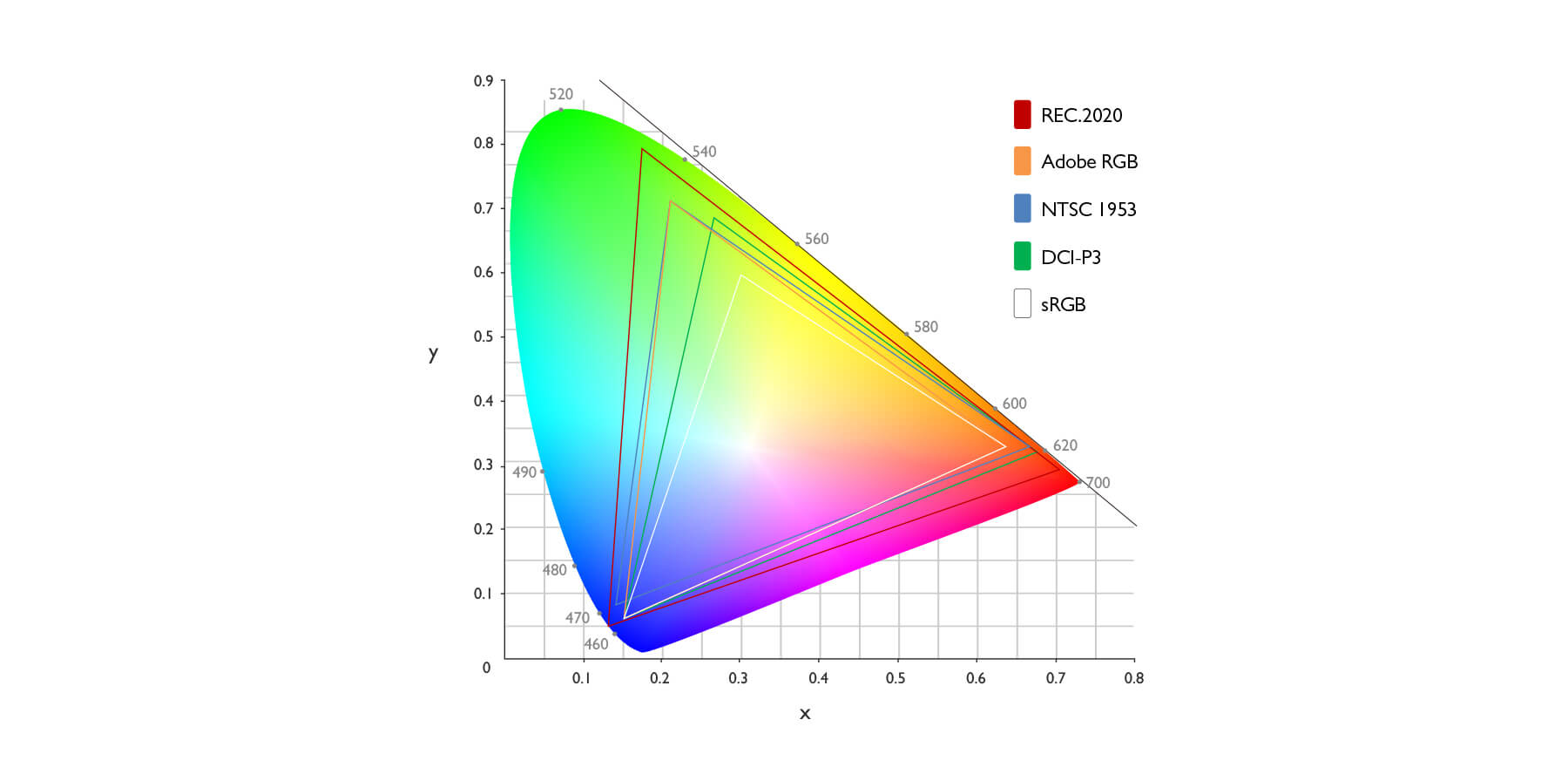

No matter how sophisticated today's technology is, it still cannot accurately display all the colors of the real world. That is why monitor descriptions often include a characteristic like 117% sRGB or 99% NTSC 76%. These numbers show how much of a given reference color space the monitor can display, that is, how wide its range of colors is. It all started with the CIE 1931 chromaticity diagram, which maps all the colors that the human eye can distinguish. It gave rise to the other currently popular color models that are used in monitors, smartphones, tablets and other mobile equipment.

sRGB was developed in 1996 by HP and Microsoft for the CRT monitors, printers and Internet of the time. The goal was for images to have the same color gamut and look the same when printed and when viewed on a monitor. Since then, sRGB has become the de facto standard for modern monitors, and most video cards are designed with it in mind. If you are looking for a monitor for working with graphic content, aim for sRGB coverage of about 100%. In all other cases you can take something simpler, since a wider color gamut raises the cost of the display.


NTSC is one of the earliest color models and today serves mostly as a yardstick for comparison with older TVs and monitors. Most LCD monitors actually cover about 75% of the NTSC space, but there are models with improved backlights that cover about 97% of it.

The Adobe RGB model was created with printing in mind, so its color gamut matches the capabilities of printing equipment. To work with Adobe RGB materials properly, both the monitor and the software must support it. A monitor with wide Adobe RGB coverage therefore makes sense primarily for those who do professional layout and design for high-quality print.

The DCI-P3 model came to us from the world of digital cinema. It is a professional color model that is now often quoted mainly to show off how good a display is. DCI-P3 covers more colors than standard sRGB, so a monitor with 98% DCI-P3 coverage can show noticeably more saturated colors than an sRGB-only display. According to many pros, it will soon replace sRGB as the standard for digital devices, websites and applications, if only because it is actively promoted by giants such as Sony, Apple, Google, Warner Bros and Disney. But since such equipment currently costs a lot of money, it is used primarily by professionals working with video content.
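One common way to compare these color spaces is to compare the areas of their triangles on the CIE 1931 xy chromaticity diagram. The sketch below uses the published red, green and blue primaries of each space. Note that this area ratio is only one convention: a percentage above 100% is usually an area ratio like this, while "coverage" strictly means the overlap between the monitor's gamut and the reference, which this simple calculation does not compute.

```python
# CIE 1931 xy coordinates of the red, green and blue primaries.
PRIMARIES = {
    "sRGB":        [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "NTSC (1953)": [(0.670, 0.330), (0.210, 0.710), (0.140, 0.080)],
    "Adobe RGB":   [(0.640, 0.330), (0.210, 0.710), (0.150, 0.060)],
    "DCI-P3":      [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
}


def triangle_area(points: list[tuple[float, float]]) -> float:
    """Area of a triangle from its three vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def relative_area(name: str, reference: str = "sRGB") -> float:
    """Gamut triangle area of `name` as a fraction of `reference`."""
    return triangle_area(PRIMARIES[name]) / triangle_area(PRIMARIES[reference])


for name in PRIMARIES:
    print(f"{name}: {relative_area(name):.0%} of sRGB by area")
print(f"sRGB: {relative_area('sRGB', 'NTSC (1953)'):.0%} of NTSC by area")
```

By this measure the sRGB triangle is a little over 70% of the NTSC one, which is in the same range as the NTSC figure typically quoted for ordinary LCD monitors, and both Adobe RGB and DCI-P3 are roughly a third larger than sRGB.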

What is the difference between bit depth and gamut?


At this point you may be wondering what the difference is between bit depth and color gamut. This is easy to understand from the picture above. Increasing the color depth reduces the risk of abrupt transitions between two neighboring shades. Expanding the gamut lets the monitor display more extreme, saturated colors. This is mostly worth understanding for its own sake, since monitors with low bit depth and a wide color gamut do not exist on the market, nor do the reverse cases with high bit depth and a narrow gamut.
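A deliberately simplified one-dimensional model shows how the two properties interact: gamut sets how far the range of colors stretches, and bit depth sets how many steps that range is divided into. The relative widths below (1.0 for a narrow gamut, 1.35 for a wide one) are hypothetical values chosen only for illustration.

```python
def step_size(gamut_width: float, bits: int) -> float:
    """Distance between neighboring shades in a toy one-dimensional gamut."""
    return gamut_width / (2 ** bits - 1)


# Hypothetical relative gamut widths, not measurements of real monitors.
for label, width in (("narrow gamut", 1.0), ("wide gamut", 1.35)):
    for bits in (8, 10):
        print(f"{label}, {bits}-bit: step = {step_size(width, bits):.5f}")
```

Stretching the same number of steps over a wider gamut makes each step coarser, while moving to 10 bits makes the steps finer again. This is one way to see why wide-gamut displays tend to be paired with higher bit depths.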

Pantone and CalMAN certified monitors


Pantone and CalMAN certifications are of interest primarily to professional designers, artists and colorists, but it would be wrong to skip them.

In 1963, Pantone changed the game by offering printers around the world a uniform standard for color reproduction. It includes 40 base colors and more than 10,000 derived shades, each with its own name and place in the famous Pantone color cards. Since then, the Pantone system has been widely used in printing, graphic design, fashion, architecture and many other fields that require accurate color matching at every stage of work. In response to these requirements, monitors appeared that are certified to reproduce the Pantone palette accurately, so that a layout designed on the designer's monitor looks the same at the print shop.

CalMAN, in turn, is a display calibration solution from Portrait Displays, a well-known Californian company. While Pantone is historically associated with print, CalMAN is the choice of most film, television and post-production professionals.
