Not a single tear: what FreeSync, G-Sync, VRR and VSync are and how they differ

We independently test the products and technologies that we recommend.

What is vertical sync and how does it help in games?

To begin with, a quick primer on the now-fashionable hertz and refresh rate. The picture that the display receives from the video card changes constantly, and the pixels are redrawn at a certain frequency. The number of such updates per second is called the refresh rate and is measured in hertz (Hz). Classic IPS and VA panels, which have been widely used in monitors, TVs and smartphones over the past decade, had a fixed refresh rate of 60 Hz. But over the past couple of years the ice has broken: smartphones with 90 Hz screens have gone on sale, 144 Hz has become the norm for gaming monitors, and modern TVs have sped up to 120 Hz to meet the requirements of the new generation of game consoles.

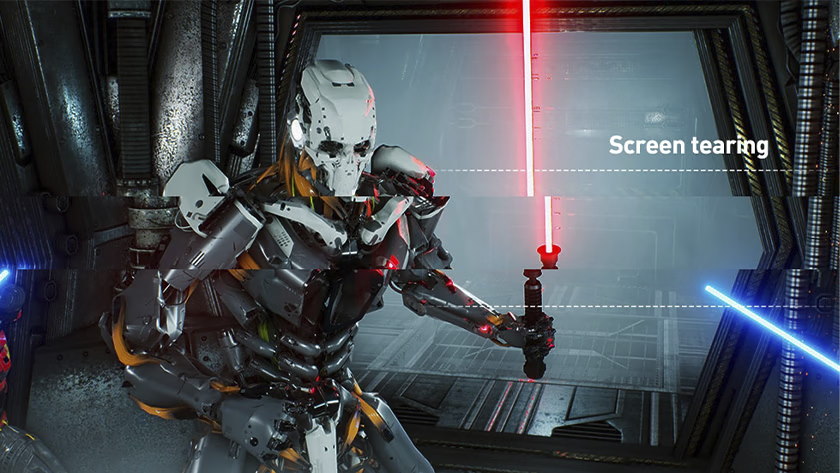

And while the display runs at a constant frequency, the video card's world is a little more complicated. Imagine Geralt the Witcher walking into a dark cave. A couple of torches hang on the walls, something smells sour, and nothing is visible. The video card is resting because it has almost nothing to draw, and the frame counter nearly doubles. Five minutes later the Witcher completes his contract to kill a vampire and finds the way out of the cave. The sun hits his eyes, and the video card suddenly has to render all the beauty of Toussaint's landscapes. And what do we see? The FPS counter immediately sags. This happens all the time because, unlike the monitor, the video card has a variable frame rate. So in one monitor refresh cycle the video card may prepare anything from half a frame to one and a half frames. Because of this the smoothness of the picture suffers and artifacts appear in the form of image tearing and stair-step edges.
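
The "half a frame to one and a half" arithmetic can be sketched in a few lines. This is only an illustration: the function name `frames_per_refresh` and the sample frame rates are assumptions made for the example, not anything defined by a GPU or monitor API.

```python
def frames_per_refresh(gpu_fps: float, refresh_hz: float) -> float:
    """How many frames the video card finishes during one monitor refresh cycle."""
    return gpu_fps / refresh_hz


# A fixed 60 Hz monitor paired with a video card whose frame rate keeps changing.
for fps in (30, 60, 90):
    ratio = frames_per_refresh(fps, 60)
    print(f"{fps} FPS on a 60 Hz display: {ratio} frames per refresh")
```

Any ratio other than a whole number means a refresh cycle starts while a frame is only partly delivered, which is where tearing comes from.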

VSync: the one-legged father

The first sensible attempt to overcome the desynchronization between the monitor and the video card was something familiar to every gamer: vertical synchronization, or VSync. It is a very crude fix that forcibly lowers the video card's FPS to match the monitor's refresh rate. Simply put, if your monitor only outputs 60 Hz and the video card can deliver up to 100 FPS in a given game, the video card is the one that gives way and gets capped at 60 FPS. It's a shame, but you can live with it. The real problems begin when the video card, on the contrary, does not have enough power. VSync only works with whole divisors of the refresh rate, so a card that cannot hold 60 FPS is dropped all the way to 30. Frankly, tearing is better than that.
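
The drop from 60 to 30 FPS follows from a simple rule, sketched below. The model is an assumption made for illustration (plain double-buffered VSync, where every frame occupies a whole number of refresh intervals), and `vsync_fps` is a hypothetical helper, not part of any driver API.

```python
import math


def vsync_fps(gpu_fps: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate under a simplified double-buffered VSync model."""
    if gpu_fps <= 0 or refresh_hz <= 0:
        raise ValueError("frame rate and refresh rate must be positive")
    # A frame that misses a refresh tick has to wait for the next one,
    # so each frame stays on screen for a whole number of refresh intervals.
    intervals = max(1, math.ceil(refresh_hz / gpu_fps))
    return refresh_hz / intervals


print(vsync_fps(100), vsync_fps(55), vsync_fps(25))  # 60.0 30.0 20.0
```

In this model a card that manages 55 FPS is shown at 30, exactly the kind of cliff described above.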

Another problem is high input lag, which makes it feel as if the game's characters are stuck in jelly, while hits and shots land with a small but very annoying delay. The reason is that on the way from the mouse to the display the signal also passes through the CPU, which ideally should not be involved in such things at all. With VSync enabled, the CPU does not start preparing a new frame until it has finished with the previous one. As a result, extra time (up to a few frame intervals, which is tens of milliseconds at 60 Hz) is spent synchronizing the video card and the display, and for fast games like shooters and racing that is fatal.
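
To get a feel for the scale of that delay, here is a minimal sketch of how long a finished frame sits idle before the next refresh tick. It assumes a simplified model in which refresh ticks fall at exact multiples of the refresh interval starting from zero. `vsync_wait_ms` is a hypothetical helper written for this example.

```python
import math


def vsync_wait_ms(ready_ms: float, refresh_hz: float = 60.0) -> float:
    """Time a finished frame waits for the next refresh tick (simplified model)."""
    interval = 1000.0 / refresh_hz  # length of one refresh cycle in milliseconds
    next_tick = math.ceil(ready_ms / interval) * interval
    return next_tick - ready_ms


# A frame that is ready 1 ms after a tick waits almost a full 60 Hz cycle.
print(round(vsync_wait_ms(1.0), 1), "ms of waiting")
```

The wait is always shorter than one refresh interval per frame in this model, but it adds up with the time spent in the rest of the rendering pipeline.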

All in all, this is no longer one elephant in the room but a whole family of elephants, and something had to be done about them. So the manufacturers of video cards and monitors set out to find a new solution.

G-Sync: beautiful, but expensive

The first successful attempt to solve the problems of vertical synchronization was G-Sync, a hardware and software system created by NVIDIA, today's leading video card manufacturer. Unlike the outdated VSync, G-Sync went the other way and taught the monitor to adjust its refresh rate to the video card, not vice versa. Given that the technology can work at frequencies from 30 to 360 Hz, this leaves plenty of freedom in choosing the optimal frame rate. And if the frame rate drops below the supported minimum, G-Sync simply repeats existing frames to fill the gap. In motion this is completely imperceptible.
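
Frame duplication can be pictured as choosing how many times to show each frame so that the panel stays inside its supported range. The sketch below is an assumption made for illustration, not NVIDIA's actual algorithm. It takes the 30 to 360 Hz range mentioned above as the panel limits, and `refresh_with_duplication` is a hypothetical name.

```python
def refresh_with_duplication(fps: float, min_hz: float = 30.0, max_hz: float = 360.0):
    """Return (repeats, panel_hz): how often each frame is shown and the resulting refresh rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    repeats = 1
    # Show every frame one more time until the panel is back above its minimum rate.
    while fps * repeats < min_hz:
        repeats += 1
    return repeats, min(fps * repeats, max_hz)


for fps in (100, 25, 12):
    repeats, panel_hz = refresh_with_duplication(fps)
    print(f"{fps} FPS: each frame shown {repeats}x, panel refreshes at {panel_hz} Hz")
```

At 25 FPS, for example, each frame is shown twice and the panel runs at 50 Hz, which is back inside the supported range.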

So why are we discussing this issue at all if NVIDIA solved it back in 2013? Well, it is not that simple. Firstly, the technology is proprietary, so for a long time it worked only with NVIDIA video cards. Secondly, for G-Sync to work correctly the monitor must be equipped with a dedicated synchronization module. Naturally, these modules cost money, and they can be bought only from NVIDIA itself. The result is a markup of $200 per monitor and the status of a niche product for wealthy fans of NVIDIA products.

Versions of G-Sync

Until 2019, NVIDIA went its own way and kept G-Sync tied to its own hardware. By then, however, a popular free alternative had appeared on the market in the form of Adaptive Sync from VESA and FreeSync from AMD. In 2019 NVIDIA met users halfway and launched the G-Sync Compatible programme. In effect, it built VESA Adaptive Sync support (more on it below) into the drivers of its video cards and into its G-Sync chips. Thanks to this, owners of G-Sync monitors and Radeon graphics cards were finally able to use proper frame synchronization. True, NVIDIA immediately warned that G-Sync Compatible monitors do not go through the additional quality and compatibility tests, so their overall picture quality may be slightly lower, and NVIDIA itself gives no guarantee that a G-Sync monitor will work perfectly with Radeon graphics cards.

Following G-Sync Compatible, NVIDIA announced several more important innovations in the same year. NVIDIA's synchronization is now supported by laptops with mobile video cards. The standard itself has also learned to work with HDR content and the wider DCI-P3 color gamut. To avoid confusing people, the new version was named G-Sync Ultimate.

VESA Adaptive Sync and the implementation of normal vertical sync

In 2014 the Video Electronics Standards Association (VESA) introduced the open Adaptive Sync technology, which can, in principle, do much the same as G-Sync, but without expensive chips, and it operates at frequencies from 9 to 240 Hz! To work, however, the technology has to be supported by the monitor, the video card driver, the operating system and games. You also need DisplayPort version 1.2a or later, since the technology became part of the DisplayPort standard. Realizing that implementing a new standard takes the efforts of many people around the world, VESA shared its work with partners, the most successful of which turned out to be the video card manufacturers NVIDIA and AMD.

FreeSync: People's Synchronization

A year and a half after the release of G-Sync, AMD responded with FreeSync. Like G-Sync, AMD's brainchild is not tied to a fixed refresh rate and synchronizes more precisely. Put simply, FreeSync automatically detects the monitor's refresh rate and makes up for missing frames by inserting additional ones (copies of frames already rendered). And whereas in films and TV shows inserted frames create the unpleasant "soap opera" effect, in games they are invisible to the eye and cause no discomfort.

Unlike G-Sync, which required an NVIDIA graphics card and a monitor with a physical G-Sync module, FreeSync works entirely in software and is free. The point is that every monitor contains a special chip called a scaler, which manages the link between the GPU and the LCD panel. It is a mandatory part of any monitor and handles the transmission of audio and video signals. NVIDIA decided to develop its own scaler and sell it to monitor manufacturers, whereas AMD went the other way and arranged with leading scaler makers such as MStar and Realtek to add FreeSync support to existing and new chips at the software level. This turned out to be so easy and cheap that the feature became an industry standard, and today it is harder to find a gaming monitor without FreeSync than with it.

FreeSync: features and versions

Initially AMD built on the VESA Adaptive Sync standard, but it did not stop there. A year after release FreeSync learned to work over HDMI (before that everything worked only over DisplayPort), and in 2017 the second version, FreeSync 2, entered the market with support for HDR content and low frame rate compensation, as in G-Sync. A little later AMD decided that the number in the name was confusing and split FreeSync 2 into two tiers: FreeSync Premium and FreeSync Premium Pro.

As a result, we got three different versions of FreeSync. The base one was tailored to older monitors with a refresh rate of 60 Hz. The Premium versions, by contrast, support at least 120 Hz at Full HD resolution. The difference between them is that FreeSync Premium Pro additionally supports HDR content with a wide color gamut. If you want to play with HDR, FreeSync Premium Pro is definitely the best choice.
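
The choice between the three tiers boils down to two questions, which can be written as a tiny helper. This is a sketch of the rule of thumb from this article only, not AMD's certification criteria. The function name `pick_freesync_tier` and its parameters are hypothetical.

```python
def pick_freesync_tier(needs_hdr: bool, needs_120hz: bool) -> str:
    """Suggest a FreeSync tier following the rule of thumb described in the article."""
    if needs_hdr:
        # Only the top tier adds HDR with a wide color gamut.
        return "FreeSync Premium Pro"
    if needs_120hz:
        # Both Premium tiers cover 120 Hz at Full HD.
        return "FreeSync Premium"
    return "FreeSync"


print(pick_freesync_tier(needs_hdr=False, needs_120hz=True))  # FreeSync Premium
```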

HDMI VRR

Looking at the success of FreeSync and G-Sync, manufacturers of TVs and game consoles decided that they could use something like this too. As a result, the HDMI 2.1 specification introduced a feature called VRR (variable refresh rate), which, much like FreeSync and G-Sync, lets the source (a game console or a computer) deliver video frames on its own schedule, as fast as it can. The display keeps showing the current frame until the next one is ready to be transmitted, which gives a smoother picture in games, without annoying stutter and judder.
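
The idea of holding the current frame until the next one is ready can be sketched as a small timeline calculation. The model is a simplifying assumption for illustration: a frame appears the moment it is ready, unless the panel refreshed too recently to keep up. The name `vrr_present_times` and the 120 Hz default ceiling are hypothetical choices made for the example.

```python
def vrr_present_times(ready_ms, max_hz: float = 120.0):
    """When each frame appears on a VRR display (simplified model)."""
    min_gap = 1000.0 / max_hz  # the panel cannot refresh faster than max_hz
    shown = []
    last = None
    for t in ready_ms:
        # Until this moment the previous frame simply stays on screen.
        when = t if last is None else max(t, last + min_gap)
        shown.append(when)
        last = when
    return shown


# Frames finished at irregular moments, shown on a panel capped at 100 Hz.
print(vrr_present_times([0.0, 20.0, 25.0, 50.0], max_hz=100.0))
# [0.0, 20.0, 30.0, 50.0]
```

Only the third frame is delayed, because it arrived just 5 ms after the previous refresh; every other frame is shown the moment it is ready, with no waiting for a fixed tick.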

VRR is based on the VESA Adaptive Sync technology mentioned above, which has also become part of the HDMI 2.1 standard. This is how adaptive sync is implemented in the next generation of consoles. And also, surprisingly, in the Xbox One S and One X. Yes, Microsoft's current-generation consoles received VRR even before HDMI 2.1 arrived.

So which is cooler?

FreeSync, G-Sync and VRR can all adjust the monitor's refresh rate to the FPS of the video card or console, not vice versa. And if the frame rate falls below the supported minimum, they fill in the missing frames by duplicating existing ones. As tests have shown, FreeSync and its sister VRR do not affect the frame rate at all, while G-Sync causes a subtle FPS loss of around 1.2%. However, this difference is completely invisible to the eye, and it would not be entirely fair to count it as an advantage of FreeSync.

And if the technologies differ so little, why pay more? Like it or not, the G-Sync hardware module costs money, so it is fitted only to above-average gaming monitors. Basic FreeSync can be found in just about any display, however cheap, while FreeSync Premium is more common in mid-range monitors. AMD FreeSync Premium Pro, for now, is found in higher-end gaming monitors. As for VRR, you will need a TV and a signal source with HDMI 2.1 support to use it.
